This article primarily focuses on computer vision and diffusion models.

Real World Applications

- Video/Image Restoration

  - Take an old video or photo that is low quality or blurred and improve it using deep learning.

- Image Editing and Synthesis using text commands:

  - “Make my smile wider”: text-suggested edits

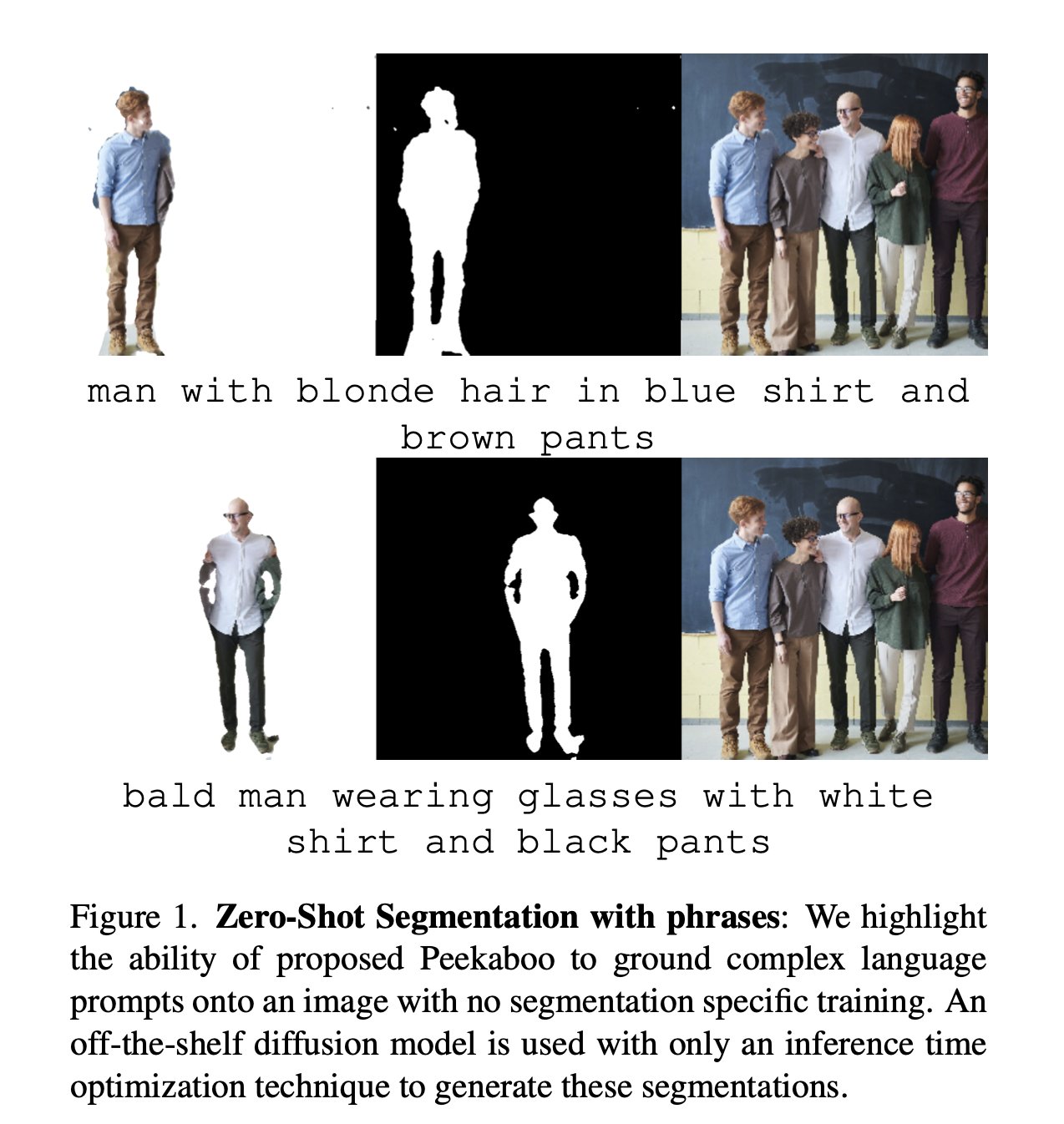

- “segment image of guy wearing blue shirt and brown pants from an image”

- Text to speech Synthesis

- Here is a good summary of TTS algorithms from aiSummer School

- Speech to text

  - OpenAI’s Whisper

- Audio Generation

- Code synthesis

- Generating Fakes (Photos, Videos, Personas)

  - This is the bread and butter of generative algorithms.

ML Applications

- Text-guided image generation, commonly implemented with guidance techniques such as classifier guidance or classifier-free guidance

- In-Painting: This refers to the process of filling in missing or corrupted parts of an image or video with plausible content. Generative models, such as Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs), can be trained to learn the underlying distribution of the data, and can then be used to generate new content that is consistent with the surrounding area.

- Style Transfer: This is the process of applying the style of one image to another image, while preserving the content of the original image. This is typically done by training a generative model to separate the style and content representations of an image, and then recombining the content of one image with the style of another image.

- Upscaling Images:

  - Super-resolution: This refers to the process of increasing the resolution of an image. Generative models, such as GANs, can be trained to learn the mapping from low-resolution images to high-resolution images.

- Few Shot Learning:

  - Neural Network Pre-Training: This refers to the process of training a generative model on a large dataset, and then using the learned representations as a starting point for fine-tuning on a smaller dataset. This can be useful when the amount of labeled data is limited, as the pre-trained model can provide a good initialization that allows the model to quickly converge to a good solution when fine-tuning on the smaller dataset.

- Reinforcement Learning Exploration: Generative models can be used in Reinforcement Learning (RL) to help improve exploration. For example, a GAN can be trained to generate new samples that are similar to existing samples in the training data, but with slight variations. These generated samples can then be used to broaden the set of states the RL agent encounters, allowing it to explore and learn from a wider range of scenarios.
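The in-painting item above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a trained model: `neighbor_mean_fill` is a hypothetical stand-in for the generative model (in practice a VAE, GAN, or diffusion model), and the names `inpaint`, `Image`, and `Mask` are made up for this example. What the sketch does show is the compositing step that in-painting relies on: the model proposes content everywhere, but only the masked pixels are replaced, so the known surroundings pass through unchanged.

```python
from typing import Callable, List

Image = List[List[float]]  # grayscale image as rows of pixel intensities
Mask = List[List[int]]     # 1 marks a missing pixel, 0 marks a known pixel


def neighbor_mean_fill(image: Image, mask: Mask) -> Image:
    """Hypothetical stand-in for a generative model (assumption).

    Proposes a value for each pixel as the mean of its known 4-neighbors.
    A real model would sample plausible content from a learned distribution.
    """
    h, w = len(image), len(image[0])
    proposal = [row[:] for row in image]
    for y in range(h):
        for x in range(w):
            known = [
                image[ny][nx]
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] == 0
            ]
            if known:
                proposal[y][x] = sum(known) / len(known)
    return proposal


def inpaint(
    image: Image,
    mask: Mask,
    generator: Callable[[Image, Mask], Image] = neighbor_mean_fill,
) -> Image:
    """Composite the proposal into the masked region only."""
    proposal = generator(image, mask)
    return [
        [m * p + (1 - m) * v for v, p, m in zip(img_row, prop_row, mask_row)]
        for img_row, prop_row, mask_row in zip(image, proposal, mask)
    ]
```

Calling `inpaint(image, mask)` with a mask that marks a single pixel returns a copy of `image` in which only that pixel has changed. Swapping `generator` for a learned model keeps the same contract.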

Methods & Approach

- Diffusion Models

- VAEs

- GANs

- Normalizing flows and Autoregressive models

- VAEs with flows and autoregressive models

- Transformer-based language generators

Techniques

- CLIP for multi-modal (text and image) representations

- Prompt engineering, chain-of-thought prompting

- Reinforcement learning from human feedback (RLHF): reinforcing behavior based on human feedback

- Stable Diffusion: combines the strengths of VAEs and diffusion models by running the diffusion process in the VAE’s compressed latent space, which makes training and sampling faster

- Super-resolution: a guided diffusion model trained on high-resolution images and conditioned on the low-resolution input image

- Cascaded Diffusion Models: a low-resolution text-conditioned or class-conditioned diffusion model chained with multiple super-resolution models
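The cascade in the last item can be sketched as a simple pipeline. This is only a structural sketch under loud assumptions: `base_model` and `super_resolution_stage` are hypothetical stand-ins (random pixels and pixel repetition) for what would be trained diffusion models, and the sizes and upscaling factors are arbitrary. The point is the control flow: one low-resolution conditioned sample, then a chain of stages that each take the previous output as conditioning and return a larger image.

```python
import random
from typing import List, Sequence

Image = List[List[float]]


def base_model(prompt: str, size: int, rng: random.Random) -> Image:
    """Hypothetical stand-in for the low-resolution, text-conditioned
    diffusion model: returns a size x size image of random intensities.
    The prompt is accepted but ignored by this placeholder."""
    return [[rng.random() for _ in range(size)] for _ in range(size)]


def super_resolution_stage(low_res: Image, factor: int) -> Image:
    """Hypothetical stand-in for a super-resolution diffusion model.

    A real stage would denoise a high-resolution sample conditioned on
    low_res; here every pixel is simply repeated factor x factor times.
    """
    return [
        [pixel for pixel in row for _ in range(factor)]
        for row in low_res
        for _ in range(factor)
    ]


def cascade(
    prompt: str,
    base_size: int = 8,
    factors: Sequence[int] = (2, 2),
    seed: int = 0,
) -> Image:
    """Run the base model once, then chain the super-resolution stages."""
    rng = random.Random(seed)
    image = base_model(prompt, base_size, rng)
    for factor in factors:
        image = super_resolution_stage(image, factor)
    return image
```

With the defaults, `cascade("a red bird")` goes from 8x8 to 16x16 to 32x32. Keeping each stage behind the same function signature is what lets the stages be trained and swapped independently.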

Cascaded Diffusion Models

Cascaded Diffusion Models - Textual Inversion

Tools

- Codex by OpenAI

- Perplexity AI BirdSQL

- GitHub Copilot

- ChatGPT

- …

Blogs

References

- Quidgest article on Generative AI:

- Industry impact and predictions about generative AI

- Applications in the industry

- Canary Mail

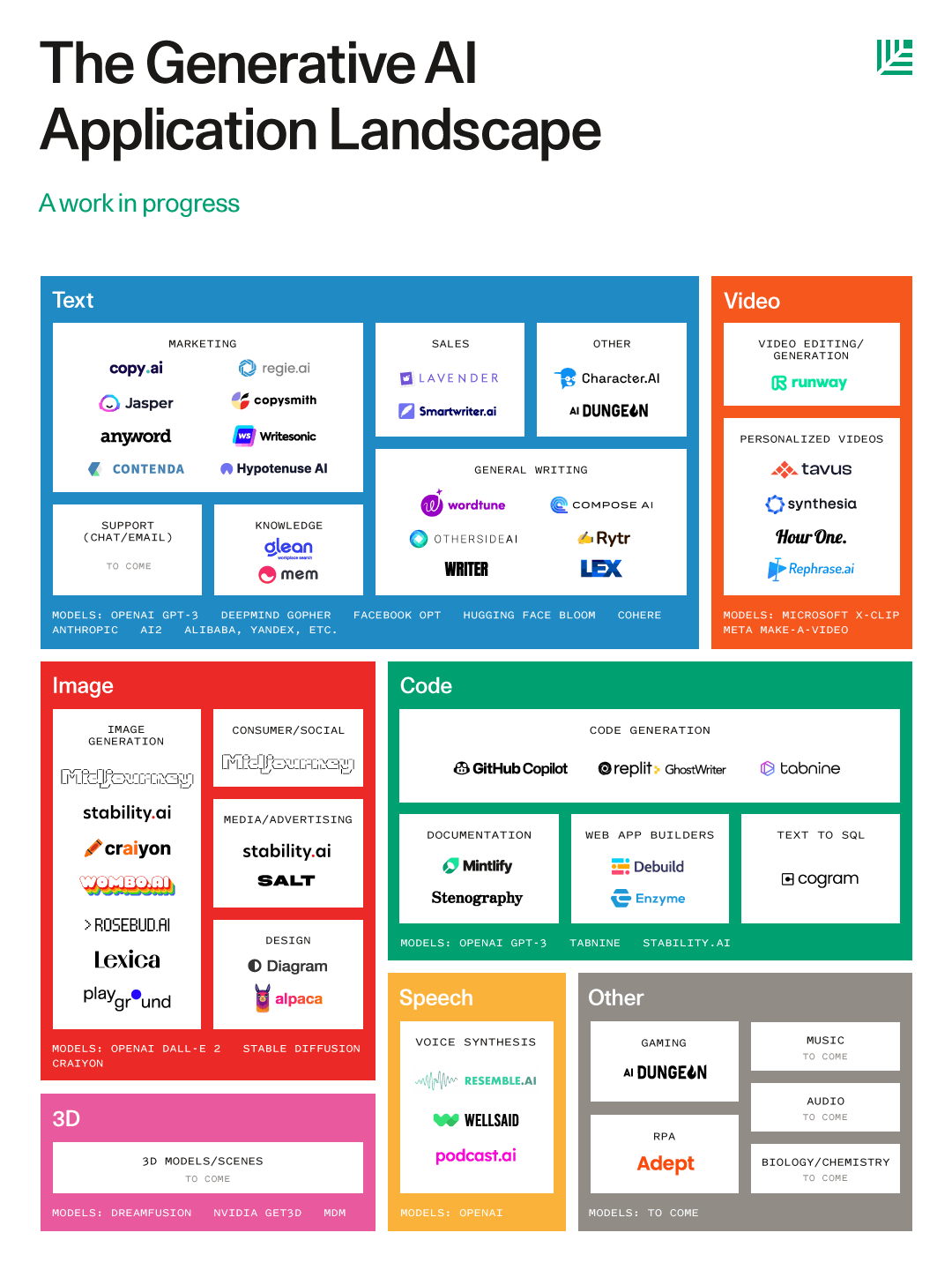

Companies

- Companies in Generative AI

- Topaz: Image and Video Editing with AI

- Quidgest: Genio, coding with AI

- replit.com

Want to connect? Reach out @varuntul22.